Low-Cost, Open-Source Artificial Intelligence Models

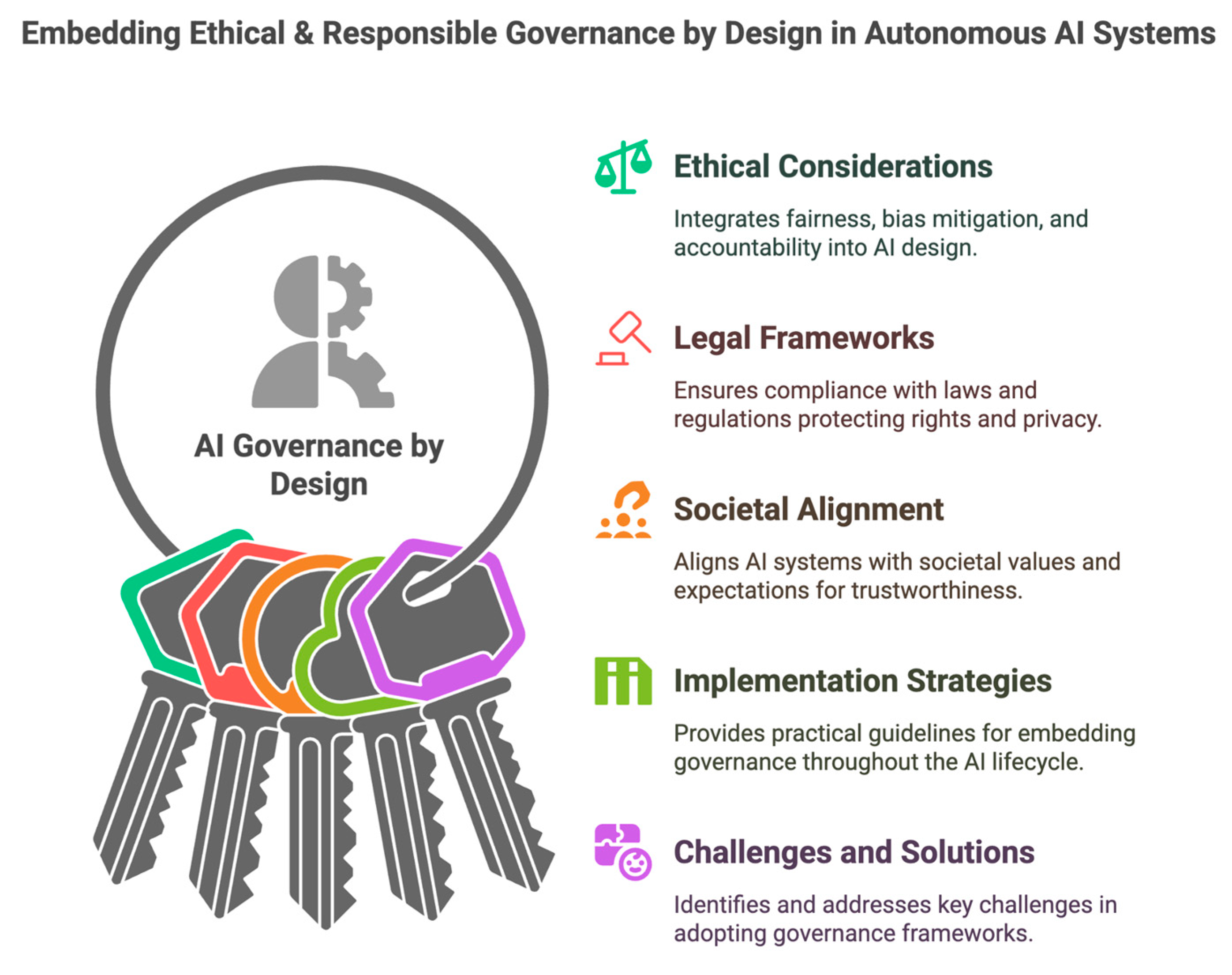

The Green and Sovereign Choice for Greece and Europe

Europe’s artificial intelligence strategy stands at a structural inflection point. Dependence on hyperscale cloud infrastructure located outside the European Union deepens systemic vendor lock-in, geopolitical exposure, and regulatory vulnerability. At the same time, the accelerating energy consumption of large AI infrastructure threatens Europe’s sustainability commitments and long-term competitiveness. The …