Since last year we have tracked the development of Article 13 of the proposed Directive on Copyright in the Digital Single Market by publishing a series of flowcharts that illustrate its internal logic (or absence thereof). Now that there is a final compromise version of the directive, we have taken another look at the inner workings of the article. The final version of Article 13 remains so problematic that, as long as it is part of the overall package, the directive as a whole will do more harm than good. This is recognised by an increasing number of MEPs, who are pledging to vote against Article 13 in the final plenary vote.

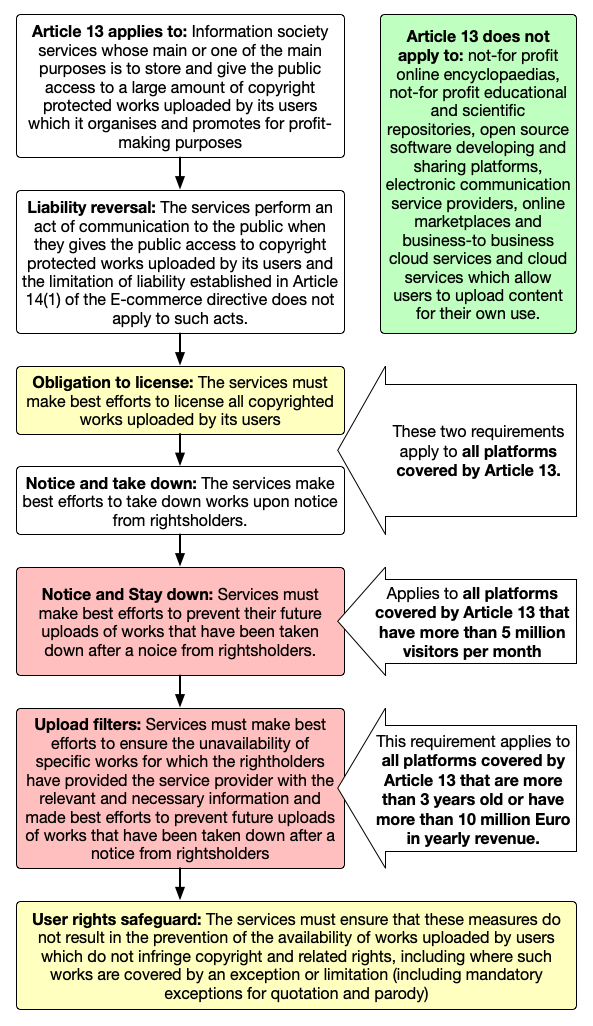

The flowchart above illustrates the main operative elements of Article 13. These include the definition of the affected services, the types of services that are explicitly excluded from its scope (the green box in the top right corner) and the reversal of the liability rules for the services covered by Article 13. It further details the obligations imposed on these services. These include an obligation to seek licenses for all copyrighted works uploaded by users (the yellow box) and the requirements to ensure the unavailability of certain works, which will force platforms to implement upload filters (the two red boxes). The yellow box at the bottom contains the measures that platforms must take to ensure that the upload filters don’t negatively affect users’ rights.

The Scope: Broad yet vague

The problems with Article 13 start with the definition of the services it applies to. While Article 13 is intended to address concerns about value distribution raised by a limited set of industries (primarily the music industry), it applies to all types of copyright-protected works. But there is no good reason why an article that is intended to bolster the bargaining power of the music industry should impose expensive obligations on platforms that have nothing to do with hosting musical works. In addition, the limitation to platforms that deal with “large amounts” of works is so vague that it does not provide any legal certainty for smaller platforms and will undoubtedly give rise to court challenges. On the positive side, the definition clearly limits the scope to for-profit services.

An approach hostile to innovation

In addition to the general exclusion of non-profit services from the scope, a number of other services are excluded (the green box). Services that fall into these categories do not have to comply with the obligations established by Article 13 and will remain under the existing liability regime of the E-Commerce Directive, which absolves them of liability as long as they have a working notice and take down policy in place. This is good news for the platforms that fall within these categories (such as Wikipedia or GitHub), but it will severely limit the emergence of new types of services in Europe, since most new platforms are not covered by the list of exceptions.

For those services that fall within its scope, Article 13 specifies that they perform an act of communication to the public when they give the public “access to copyright protected works or other protected subject matter uploaded by its users”. This rather technical language in paragraph 1 of the article changes the existing legal situation, in which the law assumes that it is the users of these platforms who publish the works by uploading them. Article 13 specifies that the platform operators are publishing the works uploaded by the users. As a result, it is the responsibility of the platforms to make sure that they have authorisation from rightsholders to publish all works that are uploaded by their users.

Impossible obligations

Since platform operators by definition cannot know which works users will upload, they will need licenses for all copyrighted works that could possibly be uploaded to their platforms. Given that there are countless copyrighted works in existence, and that only a small class of professional creators offers their works for licensing while the large majority of creators have no interest in doing so, this is an impossible obligation. As a result, platforms that cannot rely on the liability limitation of the E-Commerce Directive and that are unable to obtain licenses for all works uploaded by their users face a very substantial risk of being held liable for copyright infringement.

Filters as the only way to avoid liability

The fact that it will be impossible for platforms to obtain licenses for all the works their users could possibly upload is acknowledged in the text of Article 13, which introduces the so-called “mitigation measures” that have been at the center of the discussion about Article 13 since last summer. The term refers to measures that platforms need to implement in order not to be held liable for uploads by their users for which they have not obtained authorisation. Article 13(4) lists a total of four different measures that platforms have to implement, two of which do not apply to smaller platforms that are younger than three years:

- All platforms have to make “best efforts” to license all copyrighted works uploaded by their users (the yellow box in the middle). We have already established that it is impossible to actually license all works, so a lot depends on how “best efforts” will be interpreted in practice. On paper this rather vague term is highly problematic, since there is a virtually unlimited number of rights holders who could license their works to the platforms. It will be economically impossible for all but the biggest platforms to conclude licenses with large numbers of rights holders. For all other platforms this provision will create substantial legal uncertainty, which will result in the need for lots of expensive legal advice, which in turn will make it very difficult for smaller platforms to survive.

- In addition, all platforms will have to make “best efforts to take down works upon notice from rightsholders”. This provision re-introduces the notice and take down obligation that platforms currently have under the E-Commerce Directive and as such it is nothing new.

- On top of this, all platforms with more than 5 million monthly users will also need to implement a “notice and stay down” system (the top-most red box). This means that they will need to ensure that works that have been taken down after a notice from rightsholders cannot be re-uploaded to the platform. This requires platforms to implement filters that can recognise these works and filter them out. In terms of technology, these filters will work the same way as the more general upload filters introduced in the next step.

- Finally, all platforms that have existed for more than three years or that have more than €10 million in yearly revenue will need to make “best efforts to ensure the unavailability of specific works for which the rightsholders have provided the service providers with the relevant and necessary information”. At scale this obligation can only be achieved by implementing upload filters that block the upload of the works identified by rightsholders.
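Because the four measures are triggered by different thresholds, it can help to spell out the decision logic as we read it from the list above. The following is a minimal Python sketch, not the legal text: the names (`Platform`, `mitigation_measures`, `requires_filters`) are hypothetical, the handling of boundary values (exactly three years, exactly €10 million) is our own assumption, and the sketch cannot capture how vague terms like “best efforts” will be interpreted.

```python
from __future__ import annotations

from dataclasses import dataclass

# Thresholds as summarised in this article (a simplified reading, not the legal text).
MAX_AGE_YEARS = 3
MAX_TURNOVER_EUR = 10_000_000
MAX_MONTHLY_USERS = 5_000_000


@dataclass
class Platform:
    """Hypothetical description of a for-profit platform within the scope of Article 13."""
    age_years: float
    annual_turnover_eur: int
    monthly_users: int


def is_new_small(p: Platform) -> bool:
    # Younger than three years AND below EUR 10 million in yearly revenue.
    return p.age_years < MAX_AGE_YEARS and p.annual_turnover_eur < MAX_TURNOVER_EUR


def mitigation_measures(p: Platform) -> set[str]:
    """Return the Article 13(4) measures that apply to a platform, per our reading."""
    # Best-efforts licensing and notice and take down apply to every platform in scope.
    measures = {"best_efforts_licensing", "notice_and_take_down"}
    if not is_new_small(p):
        # Older or larger platforms face all four measures.
        measures |= {"notice_and_stay_down", "ensure_unavailability"}
    elif p.monthly_users > MAX_MONTHLY_USERS:
        # New, small but popular platforms must still keep notified works down.
        measures.add("notice_and_stay_down")
    return measures


def requires_filters(p: Platform) -> bool:
    # At scale, both stay down and unavailability can only be met with upload filters.
    return bool(mitigation_measures(p) & {"notice_and_stay_down", "ensure_unavailability"})
```

For example, `mitigation_measures(Platform(1, 2_000_000, 6_000_000))` returns the two baseline measures plus `notice_and_stay_down`, so even a young, small platform with a large audience ends up needing filters, while `Platform(1, 2_000_000, 100_000)` is only subject to the two baseline measures.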

Taken together, this means that only a small number of platforms (those that are less than three years old, have less than €10 million in revenue and have no more than 5 million monthly users) will be temporarily exempted from the obligation to implement upload filters. Regardless of how often proponents of Article 13 stress that the final text does not contain the word “filters”, there cannot be any doubt that adopting Article 13 will force almost all platforms in the EU to implement such filters.

“Protecting” user rights by way of wishful thinking

From the perspective of users, the most problematic element of upload filters is their inability to distinguish between infringing uses of a work and non-infringing uses of the same work. As a result, they will block substantial numbers of uploads that would be covered by exceptions and limitations to copyright, and thus substantially limit freedom of expression. Article 13 recognises this and tries to address the problem by introducing user rights safeguards (the yellow box at the bottom) that specify that the measures “shall not result in the prevention of the availability of works uploaded by users which do not infringe copyright and related rights, including where such works or subject matter are covered by an exception or limitation”.

Unfortunately, these user rights safeguards are nothing more than wishful thinking. At scale, implementing measures that prevent the availability of specific works means installing upload filters, but such filters are not able to distinguish between infringing and non-infringing uses of a work. Article 13 sets platforms up for failure because it is impossible to meet their obligations under the mitigation measures and to safeguard user rights at the same time. Not making best efforts on the mitigation measures carries a very concrete risk (damages for copyright infringement). As there is no equivalent risk for failing to protect user rights, it is this part of the dual obligation that platforms will give up first. The proponents of Article 13 may be right when they claim that the article requires platforms to protect user rights (“your memes are safe”), but what they fail to acknowledge is that at the implementation level this is an obligation that is impossible to comply with.
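The core of this argument, that a filter sees content but not context, can be shown with a deliberately naive matcher. The sketch below is hypothetical: real filtering systems fingerprint audio and video rather than compare word sequences, and the class name `ToyUploadFilter`, the five-word shingle size, the 50% threshold and the invented lyric are all assumptions chosen for illustration. What it shares with any content-matching filter is that its only input is the uploaded content; nothing in the check can express whether an upload is a quotation, parody or review covered by an exception.

```python
from __future__ import annotations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return every run of k consecutive words (a toy content fingerprint)."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


class ToyUploadFilter:
    """Hypothetical filter that blocks uploads containing most of a registered work."""

    def __init__(self, k: int = 5, threshold: float = 0.5) -> None:
        self.k = k
        self.threshold = threshold  # share of a work's shingles that triggers a block
        self.reference: dict[str, set[tuple[str, ...]]] = {}

    def register_work(self, work_id: str, text: str) -> None:
        # Rightsholders supply "relevant and necessary information" about a work.
        self.reference[work_id] = shingles(text, self.k)

    def check(self, upload: str) -> str | None:
        # Only the content is inspected; the purpose or context of the upload is never an input.
        found = shingles(upload, self.k)
        for work_id, ref in self.reference.items():
            if ref and len(ref & found) / len(ref) >= self.threshold:
                return work_id  # blocked as a match for this work
        return None  # allowed


# An invented lyric standing in for a work identified by a rightsholder.
lyric = "we sail the silver river under lanterns made of light and never count the hours"
review = ("In this review I quote the chorus in full: " + lyric
          + " and argue that its imagery is derivative.")

f = ToyUploadFilter()
f.register_work("lyric-1", lyric)
print(f.check(lyric))   # -> lyric-1 (verbatim copy blocked)
print(f.check(review))  # -> lyric-1 (quotation for criticism blocked as well)
```

Tuning the threshold or the shingle size only changes how much of a work must appear before an upload is blocked. It cannot separate the review from the copy, because both contain the same words.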

It’s not too late to remove Article 13

Almost 30 months of discussions have not resolved this internal contradiction of Article 13. The final text is a terrible compromise that is disingenuous, user hostile and will result in significant legal uncertainty for the European internet economy. At the root of this problem is the idea that increasing the liability of platforms will force them to accept licenses on terms more favourable to rightsholders. As we have argued from the start, increasing the liability of platforms causes collateral damage, both among platforms that have nothing to do with sharing music and for the users of all platforms.

The final vote in the European Parliament at the end of this month offers one last chance to get rid of this legislative abomination. Removing Article 13 from the rest of the directive would allow some of the positive parts of the directive to go forward (and there is an increasing number of MEPs pledging to do this). If such an attempt fails, MEPs should reject the directive in its entirety.

—

Source of this article: https://www.communia-association.org/